Bluff: Interactively Deciphering Adversarial Attacks on Deep Neural Networks

(* Authors contributed equally)

Abstract

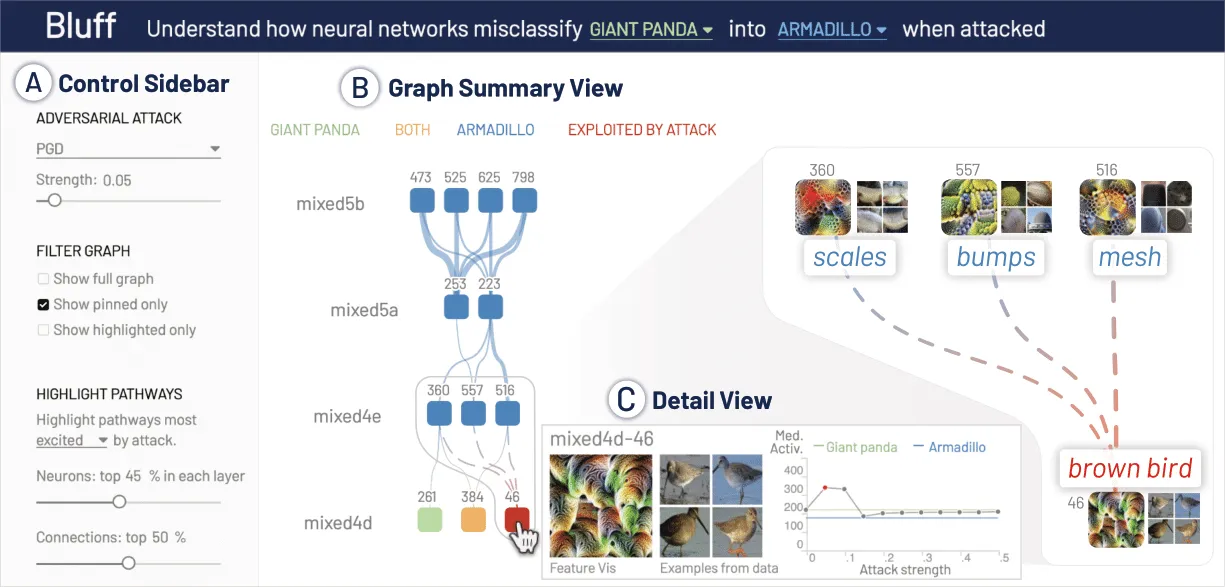

Deep neural networks (DNNs) are now commonly used in many domains. However, they are vulnerable to adversarial attacks: carefully crafted perturbations on data inputs that can fool a model into making incorrect predictions. Despite significant research on developing DNN attack and defense techniques, people still lack an understanding of how such attacks penetrate a model's internals. We present Bluff, an interactive system for visualizing, characterizing, and deciphering adversarial attacks on vision-based neural networks. Bluff allows people to flexibly visualize and compare the activation pathways for benign and attacked images, revealing mechanisms that adversarial attacks employ to inflict harm on a model. Bluff is open-sourced and runs in modern web browsers.

Citation

Bluff: Interactively Deciphering Adversarial Attacks on Deep Neural Networks

@inproceedings{dasBluffInteractivelyDeciphering2020,
  title={Bluff: Interactively Deciphering Adversarial Attacks on Deep Neural Networks},
  author={Das, Nilaksh and Park, Haekyu and Wang, Zijie J and Hohman, Fred and Firstman, Robert and Rogers, Emily and Chau, Duen Horng},
  booktitle={IEEE Visualization Conference (VIS)},
  publisher={IEEE},
  year={2020}
}
