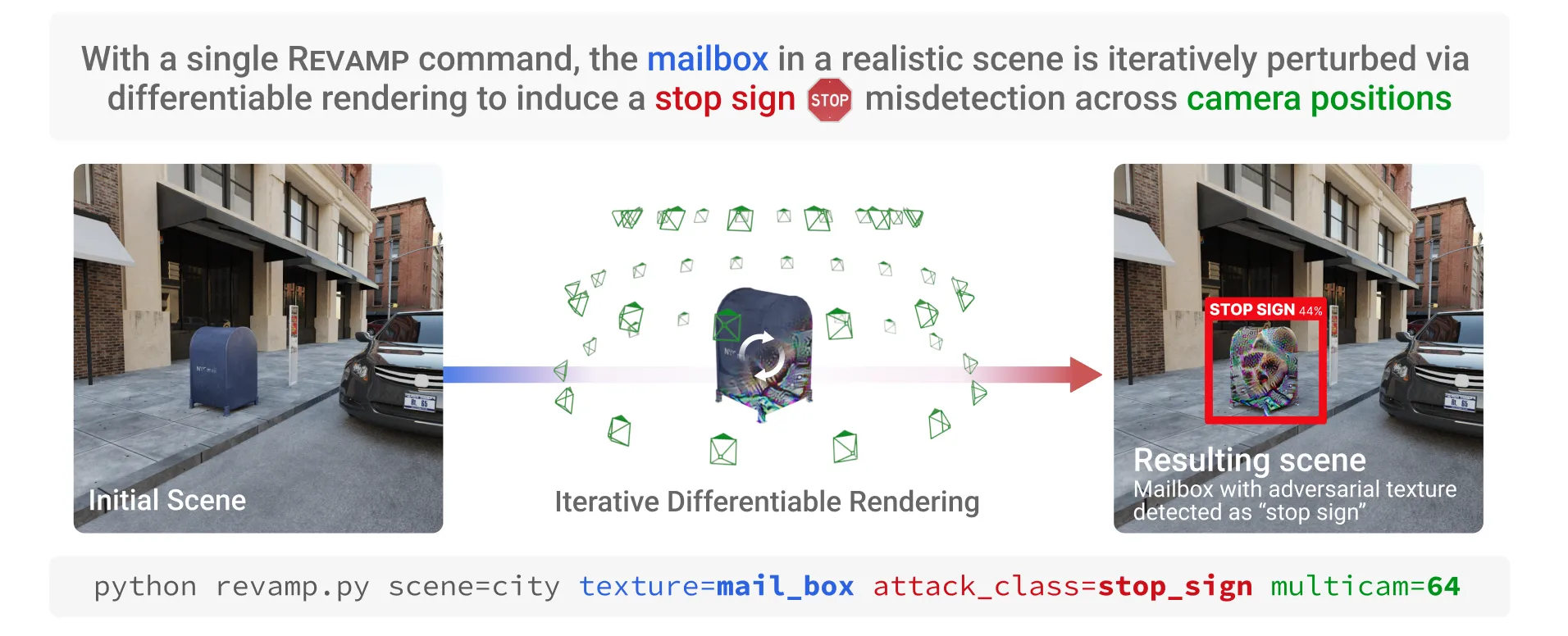

REVAMP: Automated Simulations of Adversarial Attacks on Arbitrary Objects in Realistic Scenes

Abstract

Deep learning models, such as those used in autonomous vehicles, are vulnerable to adversarial attacks in which an attacker places an adversarial object in the environment, leading to misclassification. Generating these adversarial objects in the digital space has been extensively studied; however, successfully transferring these attacks from the digital realm to the physical realm has proven challenging when controlling for real-world environmental factors. In response to these limitations, we introduce REVAMP, an easy-to-use Python library that is a first-of-its-kind tool for creating attack scenarios with arbitrary objects and simulating realistic environmental factors, lighting, reflection, and refraction. REVAMP enables researchers and practitioners to swiftly explore various scenarios within the digital realm by offering a wide range of configurable options for designing experiments and by using differentiable rendering to reproduce physically plausible adversarial objects. We will demonstrate REVAMP and invite the audience to use it to produce an adversarial texture on a chosen object while controlling various scene parameters. The audience will choose a scene, an object to attack, the desired attack class, and the number of camera positions to use. Then, in real time, we show how the altered texture causes the chosen object to be misclassified, showcasing the potential of REVAMP in real-world scenarios. REVAMP is open-source and available at https://github.com/poloclub/revamp.

Citation

REVAMP: Automated Simulations of Adversarial Attacks on Arbitrary Objects in Realistic Scenes

@inproceedings{hull2024revamp,
  title={Revamp: Automated Simulations of Adversarial Attacks on Arbitrary Objects in Realistic Scenes},
  author={Matthew Daniel Hull and Zijie J. Wang and Duen Horng Chau},
  booktitle={The Second Tiny Papers Track at ICLR 2024},
  year={2024},
  url={https://openreview.net/forum?id=XCLrySEUBe}
}
